Hiding in the Light

A team from Cornell University has developed a new method for watermarking videos that addresses a key limitation of earlier approaches to detecting manipulated content. Earlier efforts examined changes in specific pixels to determine whether a video had been altered, but they required knowledge of the camera or AI model used to create the video.

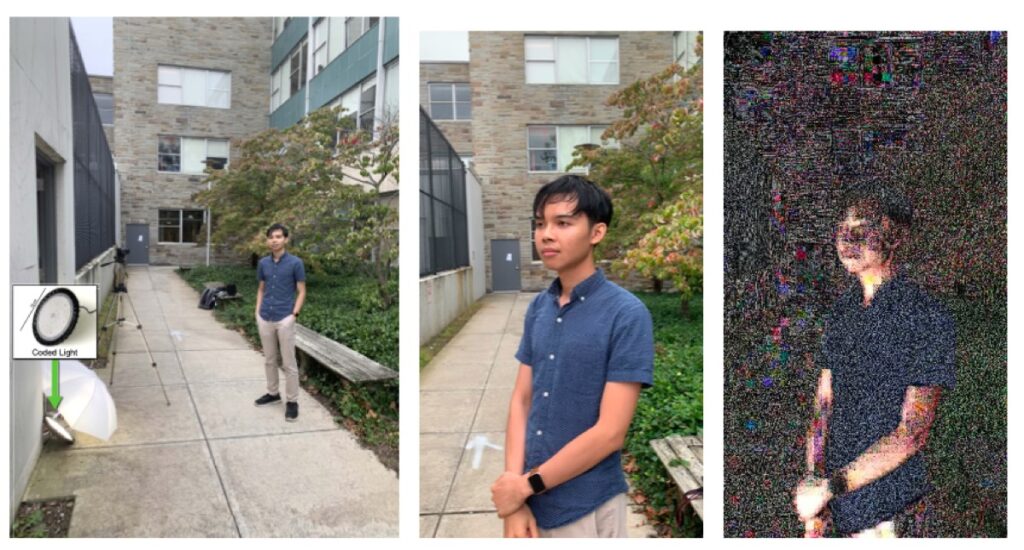

The new technique, called “noise-coded illumination” (NCI), hides a watermark in the light that illuminates a scene, encoding it as subtle fluctuations that resemble the natural noise of the light source. It can be applied with simple software for computer displays and certain lighting setups, and consumer-grade lamps can be coded by attaching a small computer chip.
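To make the idea of coded light concrete, here is a minimal sketch. It assumes a lamp whose brightness can be set once per video frame and a secret code made of pseudorandom +1/-1 values from a seeded generator. The names `make_code` and `coded_brightness`, the 1% flicker amplitude, and the ±1 code are illustrative assumptions, not the modulation scheme described in the paper.

```python
import random


def make_code(length: int, seed: int) -> list[float]:
    """Hypothetical secret code: pseudorandom +1/-1 values from a seeded PRNG.

    Equal odds of +1 and -1 make the code roughly zero-mean, so it averages
    out to the lamp's normal brightness over time.
    """
    rng = random.Random(seed)
    return [rng.choice((-1.0, 1.0)) for _ in range(length)]


def coded_brightness(base: float, amplitude: float, code: list[float]) -> list[float]:
    """Per-frame lamp brightness: the base level plus a small coded flicker.

    `amplitude` is a fraction of `base`; keeping it small is what lets the
    modulation pass for ordinary light noise.
    """
    return [base * (1.0 + amplitude * c) for c in code]


code = make_code(length=8, seed=42)
levels = coded_brightness(base=100.0, amplitude=0.01, code=code)
print([round(v, 2) for v in levels])
```

Every frame lands at either 99 or 101 units: the lamp never strays more than 1% from its base level, yet the exact sequence is known only to whoever holds the seed.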

According to Davis, a researcher involved in the study, “Each watermark includes a low-fidelity, time-stamped version of the original video captured under varying lighting conditions. We refer to these as code videos.” Because the code videos are embedded in the footage itself, any alteration to the original video produces discrepancies with them, revealing where changes were made. If someone instead generates fake footage with AI, the recovered code videos look like random variations rather than a coherent image, which exposes the fake. And since the watermark is designed to look like noise, it is very difficult to detect without knowing the secret code.
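The following toy model, a hedged sketch rather than the authors' algorithm, illustrates how a known code could be used to recover one frame of a code video and flag tampering. It assumes a static scene lit by a single coded lamp, treats each pixel as a time series, and estimates the code signal per pixel as the covariance between that pixel's intensity and the code. Pixels that never saw the coded light stand in for pasted or AI-generated content. The helper names, noise level, and the 0.1 threshold are hypothetical choices for illustration.

```python
import random
import statistics


def make_code(length: int, seed: int) -> list[float]:
    """Hypothetical secret code: pseudorandom +1/-1 values from a seeded PRNG."""
    rng = random.Random(seed)
    return [rng.choice((-1.0, 1.0)) for _ in range(length)]


def simulate_pixels(reflectance, lit_by_code, code, base=100.0, amplitude=0.01, noise=0.5, seed=0):
    """Toy footage: one intensity time series per pixel.

    Pixels with lit_by_code[p] == False stand in for fabricated content that
    was never lit by the coded lamp, so they see constant light plus noise.
    """
    rng = random.Random(seed)
    video = []
    for r, lit in zip(reflectance, lit_by_code):
        series = []
        for c in code:
            light = base * (1.0 + amplitude * c) if lit else base
            series.append(r * light + rng.gauss(0.0, noise))
        video.append(series)
    return video


def code_frame(video, code):
    """One frame of a toy 'code video': per-pixel covariance with the known code."""
    mean_c = statistics.fmean(code)
    frame = []
    for series in video:
        mean_s = statistics.fmean(series)
        cov = sum((s - mean_s) * (c - mean_c) for s, c in zip(series, code)) / len(code)
        frame.append(cov)
    return frame


code = make_code(2000, seed=7)
video = simulate_pixels([0.8, 0.6, 0.9, 0.7], [True, True, False, True], code)
frame = code_frame(video, code)
suspect = [p for p, v in enumerate(frame) if abs(v) < 0.1]  # hypothetical threshold
print(suspect)
```

With these settings, the coded pixels give values close to their reflectance (roughly 0.6 to 0.8), while pixel 2, which was never lit by the code, gives a value near zero and is the only one flagged. Averaging over more frames shrinks the noise in each estimate, at the cost of a lower-resolution code video in time.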

The Cornell team tested the technique against numerous forms of video manipulation, including speed changes, compositing, and deepfakes. It proved robust to low signal levels, camera and subject motion, a range of skin tones, and varying levels of video compression, both indoors and outdoors.

Davis emphasized the robustness of their approach, stating, “Even if a malicious actor becomes aware of this technique and deduces the codes, their task becomes significantly more complex. Rather than fabricating the light for a single video, they would need to recreate code videos separately, ensuring coherence among all fakes.” He acknowledged the ongoing challenges in the field, noting that the issue of video manipulation will continue to grow in complexity over time.
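Davis's point about faking each code video separately can be illustrated with a second toy sketch. It assumes two lamps driven by independent pseudorandom codes (the seeds, the 1% amplitude, and the 60/40 brightness split are hypothetical) and shows that correlating a pixel with each code recovers each lamp's contribution on its own. A forgery that reproduces lamp A's code but renders lamp B as steady light still matches A, but its B channel collapses toward zero, so the two code videos no longer agree.

```python
import random
import statistics


def make_code(length: int, seed: int) -> list[float]:
    """Hypothetical secret code: pseudorandom +1/-1 values from a seeded PRNG."""
    rng = random.Random(seed)
    return [rng.choice((-1.0, 1.0)) for _ in range(length)]


def covariance(xs, ys):
    """Covariance (population normalization) of two equal-length series."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)


n = 4000
code_a = make_code(n, seed=1)
code_b = make_code(n, seed=2)
rng = random.Random(3)

# Authentic pixel: lamp A contributes 60 units and lamp B 40 units, each with a 1% coded flicker.
authentic = [60 * (1 + 0.01 * a) + 40 * (1 + 0.01 * b) + rng.gauss(0, 0.5)
             for a, b in zip(code_a, code_b)]

# Forged pixel: the forger reproduced lamp A's code but rendered lamp B as steady light.
forged = [60 * (1 + 0.01 * a) + 40 + rng.gauss(0, 0.5) for a in code_a]

for name, pixel in (("authentic", authentic), ("forged", forged)):
    print(name, round(covariance(pixel, code_a), 2), round(covariance(pixel, code_b), 2))
```

In this toy setting the authentic pixel yields roughly 0.6 and 0.4 on the two codes, matching each lamp's share of the light, while the forged pixel yields roughly 0.6 and 0. Independent codes are nearly uncorrelated, which is what lets each lamp's signal be separated and checked against the others.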

ACM Transactions on Graphics, 2025. DOI: 10.1145/3742892.